
How much AI do you need to build an effective conversation between a human and a computer?

Posted by AnswerLab Research on Jan 2, 2019

This piece is a supplement to our recent report, Elements of a Successful Digital Assistant and How to Apply Them. In this research we explored conversational interface design and provided a look into the key experience dimensions brands must consider when designing digital assistants. Now, we're taking a closer look at the technology behind it to understand the level of AI needed to build effective conversations.

It’s important to have a conceptual understanding of the techniques used to build conversational interfaces so you can apply useful design and implementation strategies as these technologies evolve.

A prevailing misconception is that the effectiveness of human-computer conversation depends on AI automation alone. On the contrary, humans are essential to building effective conversations. Conversation design skill sets, such as applied linguistics, are in high demand. Why? Matter-of-fact questions like ‘What’s the time now?’ are easy for a computer to process, but a question like ‘What do you recommend?’ is hard. AI has a hard time predicting the variances in human logic, context, and emotion behind such a question. Humans need to step in to engineer the logic and write prompts where computers cannot; more importantly, humans can help brands express their own personality through the custom design of words and sound. If it were all left to the computer to automate answers, all digital assistants would be the same, and boring.

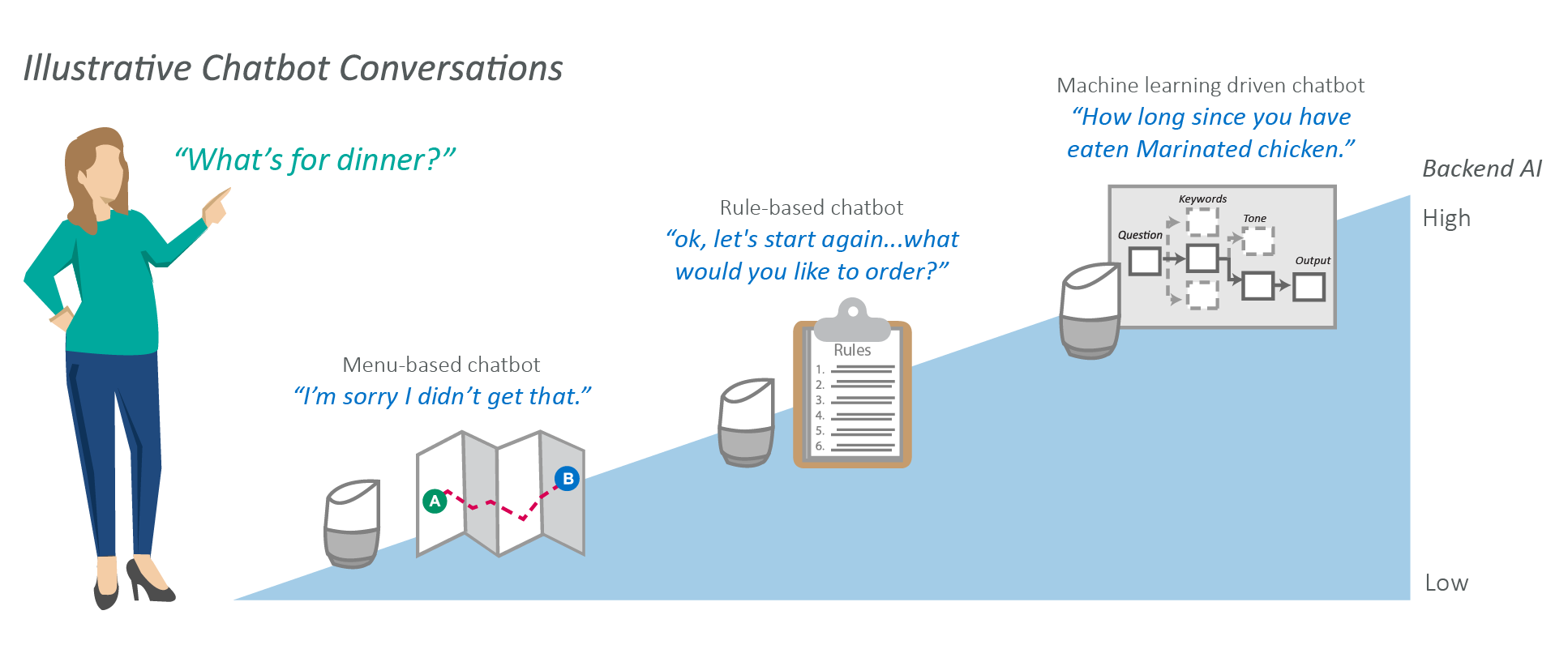

Whether a user is texting a chatbot or talking to a smart speaker, the information is processed the same way, except that the latter also involves converting speech to text. To simplify, there are three stages at play when a human says something to a computer. The computer will:

The first two stages belong to the field of natural language understanding (NLU), while the last belongs to natural language generation (NLG). Both NLU and NLG can be handled by a human, by AI, or by a hybrid of the two.

AI is quite good at automating the NLU stage with well-established technology such as speech recognition and machine learning. The NLG stage is where brands can truly differentiate by designing what is said and how it is said. In this stage, it is too labor-intensive for humans to write every rule and response; it is also too error-prone to have AI generate responses automatically.
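As a rough illustration of this division of labor, the sketch below splits one conversational turn into an understanding step and a generation step. Everything in it is hypothetical: the function names (`understand`, `generate`, `respond`), the keyword lookup standing in for a trained NLU model, and the response templates. It is a sketch of the hybrid idea under those assumptions, not a description of how any particular assistant is built.

```python
# Hypothetical sketch: automated understanding, human-written responses.

# Stand-in for the NLU stage. A real assistant would likely use speech
# recognition and a trained model here; a keyword lookup keeps this runnable.
INTENT_KEYWORDS = {
    "get_time": ["time"],
    "get_recommendation": ["recommend", "suggest"],
}

# NLG stage: responses written by a conversation designer, one per intent,
# so the brand controls what is said and how it is said.
RESPONSE_TEMPLATES = {
    "get_time": "It's {time} right now.",
    "get_recommendation": "Happy to help! What are you in the mood for?",
    "unknown": "Sorry, I didn't catch that. Could you rephrase?",
}


def understand(utterance: str) -> str:
    """Map the user's text to an intent label (the NLU step)."""
    text = utterance.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "unknown"


def generate(intent: str, **slots: str) -> str:
    """Fill the designer-written template for the intent (the NLG step)."""
    return RESPONSE_TEMPLATES[intent].format(**slots)


def respond(utterance: str, current_time: str = "3:00 PM") -> str:
    """Run one turn: understand the utterance, then generate a reply."""
    return generate(understand(utterance), time=current_time)
```

Calling `respond("What's the time now?")` goes through the time template, while `respond("What do you recommend?")` returns the designer's follow-up question. The wording of each reply lives in `RESPONSE_TEMPLATES`, which is the part a conversation designer would own.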

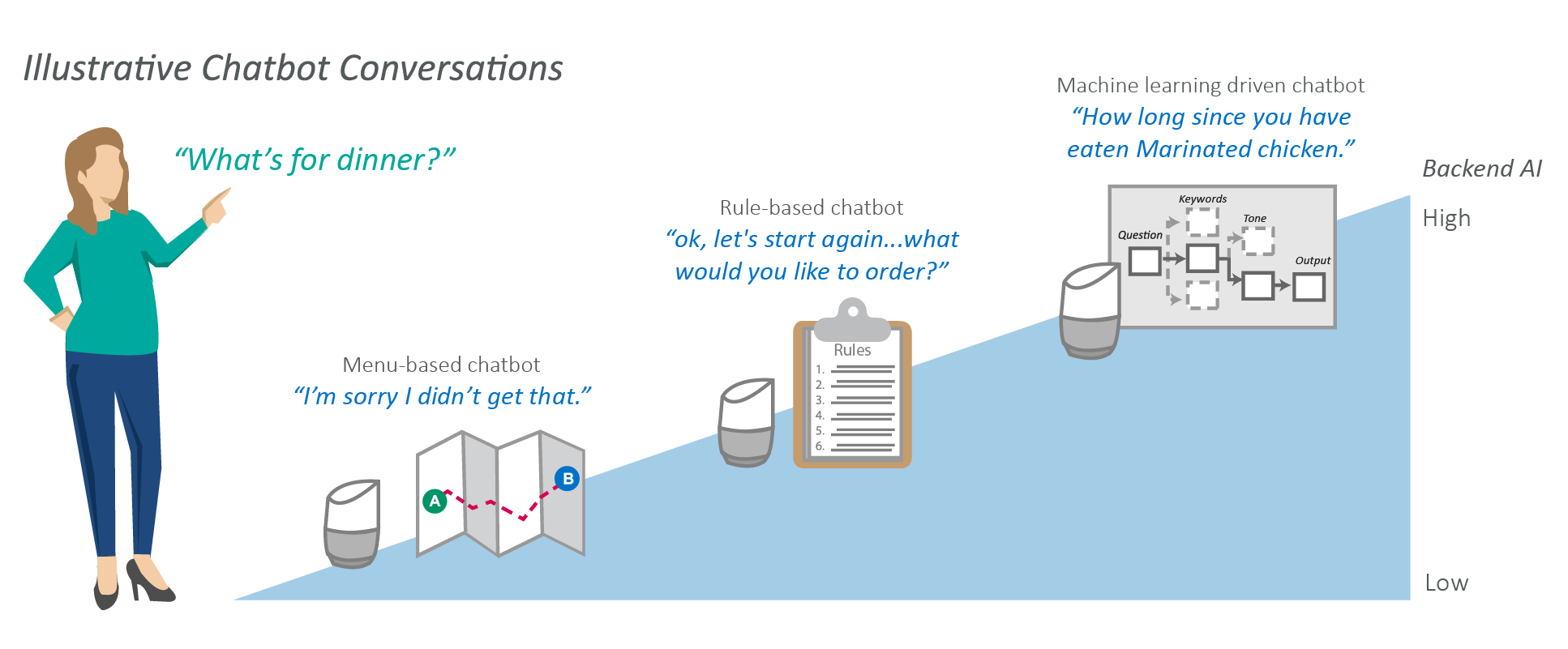

There are three development techniques for today’s digital assistants.

01 Machine learning

Machine learning is particularly effective for analyzing user intent when the questions are freeform. It is also essential to generalist-type assistants that are designed to answer a wide range of questions with a large knowledge base (e.g., Hound, Cortana, Alexa, Google Assistant). It takes a lot of actual user data to train the machine learning algorithms to infer the meaning behind speech automatically. For example, consider the challenge of having a machine identify health problems based on user descriptions of symptoms. To train the algorithms, a machine’s designer might select 100 types of data inputs and 20,000 health records, while looking at what users type or speak to the system in real time and manually labeling and updating the data (i.e., sorting keywords under disease types). The decisions behind these numbers and the types of data inputs are part of the art of designing machine learning systems.

“It does not do travel.” - David, Participant
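To make the labeling-and-training idea in this section concrete, here is a minimal sketch of a text classifier trained on hand-labeled symptom descriptions. It assumes a naive Bayes bag-of-words model, which is one common technique and not necessarily what any of the assistants named above use. The six training sentences and the two labels are invented for illustration and are far smaller than real training data.

```python
import math
from collections import Counter, defaultdict

# Hypothetical, hand-labeled training data (made up for illustration).
LABELED_EXAMPLES = [
    ("i have a runny nose and a sore throat", "cold"),
    ("sneezing and a stuffy nose all day", "cold"),
    ("my throat is sore and i keep coughing", "cold"),
    ("my head is pounding and light hurts my eyes", "migraine"),
    ("throbbing headache with nausea", "migraine"),
    ("bad headache on one side of my head", "migraine"),
]


def train(examples):
    """Count words per label; these counts are the whole 'model'."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocabulary


def classify(text, model):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing so unseen words do not zero out a label.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(LABELED_EXAMPLES)
```

With so few examples, the model only separates phrasings that share distinctive words with its training sentences, and common filler words can easily tip it the wrong way. That fragility is one reason this approach needs a lot of actual user data and ongoing manual labeling.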

02 Rule-based methods

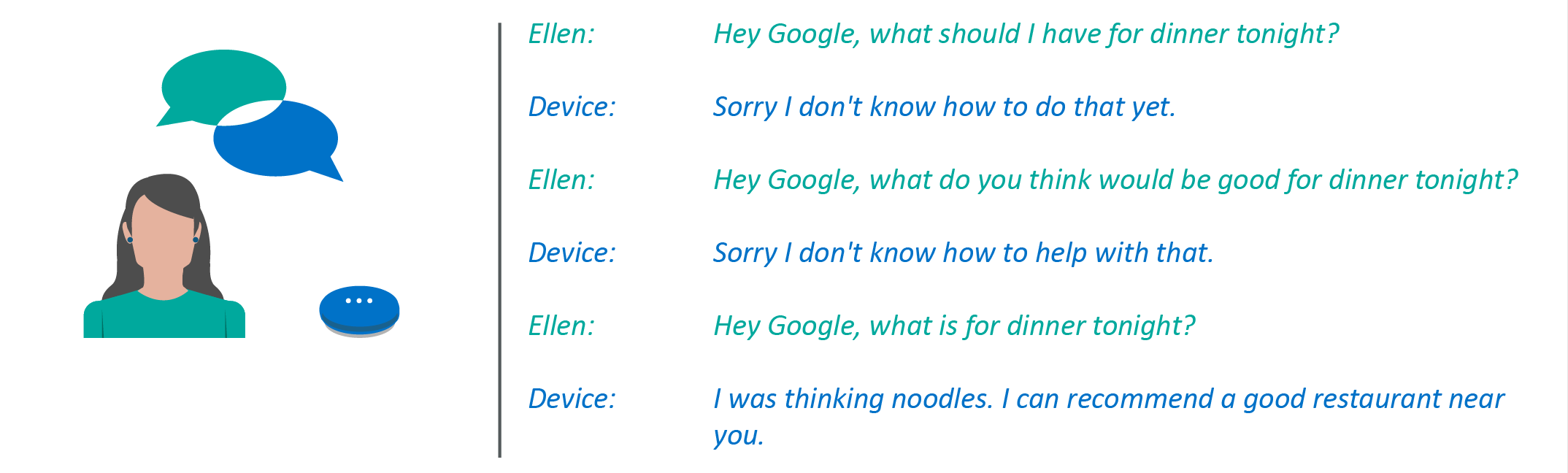

Simpler techniques like pattern matching or rule-based methods can work if the assistant is meant to be a specialist as opposed to a generalist. Specialists perform a list of well-defined tasks in a finite number of topics. Often, rule-based AI is effective if the number of ways that a user can express an intent is relatively predictable. For example, for the intent of ‘booking a flight to Shanghai,’ one might say ‘I need a flight to Shanghai’ while someone else might say ‘I want to fly to Shanghai.’ That may be it. In contrast, there are many more ways to ask for something as simple as a dinner recommendation than there are to ask for a flight.

“You can see just the slight wording difference means I get an answer versus I don’t. I have to speak in a specific way.” - Ellen, Participant
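The flight-booking example above can be sketched with a pair of hand-written patterns. The rule list, the intent name `book_flight`, and the function `match_book_flight` are all hypothetical; the sketch only uses Python's standard `re` module.

```python
import re

# Hypothetical hand-written rules for a single intent, "book_flight".
# Each pattern captures the destination in a named group called "city".
BOOK_FLIGHT_RULES = [
    re.compile(r"\bi need a flight to (?P<city>[a-z ]+)", re.IGNORECASE),
    re.compile(r"\bi want to fly to (?P<city>[a-z ]+)", re.IGNORECASE),
]


def match_book_flight(utterance):
    """Return the destination if any rule matches, otherwise None."""
    for rule in BOOK_FLIGHT_RULES:
        found = rule.search(utterance)
        if found:
            return found.group("city").strip().title()
    return None
```

Both phrasings from the text resolve to the same destination, but a request such as ‘Can you get me to Shanghai?’ matches neither rule and returns `None`. That is the behavior the participant describes: a slight wording difference decides whether there is an answer at all, unless someone writes another rule.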

03 Menu-based technique

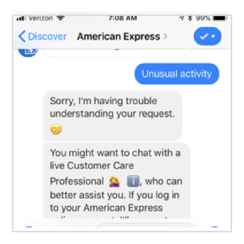

A menu-based technique uses the least amount of AI. Many Facebook Messenger bots, for example, are menu-based. In this case, brands show multiple options in a vertical or horizontal list for users to select from, and the bot returns pre-set answers. This method only works well when the chatbot is meant to be just an additional user interface. A user can query a menu-based chatbot by choosing from pre-set menu options like ‘What’s my balance?’ Little to no flexibility is possible in the way a user asks a question. In the example on the right, the American Express Messenger bot fails to understand the user's request.
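A menu-based bot can be reduced to a lookup table, as in the sketch below. The menu entries, the canned answers, and the function names are hypothetical and do not reflect any real bot's content.

```python
# Hypothetical menu for a banking chatbot; options and answers are pre-set.
MENU = {
    "1": ("What's my balance?", "Your balance is shown in the Accounts tab."),
    "2": ("When is my payment due?", "Your due date is on your latest statement."),
    "3": ("Talk to an agent", "Connecting you to an agent."),
}


def render_menu():
    """Return the options as a numbered, vertical list."""
    return "\n".join(f"{key}. {label}" for key, (label, _) in MENU.items())


def handle_selection(choice):
    """Return the pre-set answer, or show the menu again for anything else."""
    option = MENU.get(choice.strip())
    if option is None:
        # No intent extraction: free text is simply not understood.
        return "Please choose one of the options:\n" + render_menu()
    return option[1]
```

Selecting `"1"` returns the pre-set balance answer, while typing a free-form question such as ‘How much do I owe?’ only brings the menu back. There is no language understanding involved, which is why the approach suits a chatbot that acts as an additional user interface.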

Remember when the waiter at the restaurant reads you today’s specials and, by the end, you can’t remember any of them or tell whether you’re allowed to barge in? Porting menu-based chatbot design directly to a voice assistant doesn’t work well because seeing a menu is almost always easier than hearing a menu. If the answer involves a list over voice, the voice assistant needs to set expectations for what’s possible and what’s about to come, and guide the user to get there. The Alexa Voice Design Guide suggests giving between two and five options and using brevity, pacing, and arrangement when listing options in a voice response.
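One way to apply this advice is to read a long list in small batches, stating the total up front and ending each turn with a clear next step. The sketch below is an assumption about how that might look; the function name `build_voice_prompt`, the default batch size of three, and the wording of the prompts are all invented for illustration.

```python
def build_voice_prompt(options, start=0, max_per_turn=3):
    """Build one spoken turn: set expectations, list a few options, guide."""
    total = len(options)
    batch = options[start:start + max_per_turn]
    if not batch:
        return "That's everything on the list."
    remaining = total - (start + len(batch))

    # Join the batch the way a person would say it aloud.
    if len(batch) > 1:
        listed = ", ".join(batch[:-1]) + ", or " + batch[-1]
    else:
        listed = batch[0]

    # Only the first turn announces how many options exist in total.
    prompt = f"There are {total} specials today. " if start == 0 else ""
    prompt += f"You can have {listed}."

    # Close by telling the user what they can do next.
    if remaining > 0:
        prompt += " Want to hear more, or pick one of these?"
    else:
        prompt += " Which one would you like?"
    return prompt
```

For a list of four specials, the first call lists three and offers to continue, and a second call with `start=3` lists the last one and asks for a choice. Keeping each turn short gives the user a natural point to interrupt.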

A menu-based assistant is incapable of extracting intent by definition. Rule-based and machine learning-driven assistants can extract intent from flexible commands, with machine learning being the technique that has the most flexibility and allows for context awareness. The more flexible and context-aware a digital assistant gets, the more useful it becomes for the user. This doesn’t automatically make machine learning the best choice for creating effective conversations. For example, if the job of the digital assistant is to reorder the last pizza you bought, AI may be overkill. Rule-based methods are also the better choice when you need greater control over exactly what the digital assistant will do.

How much AI should be involved depends on the job to be done. Brands have a number of levers to pull in designing digital assistants. They can control their approach, the software they use, and the knowledge base that responses are drawn from. Regardless, building effective conversations between digital assistants and end users is increasingly a design problem rather than a technical one.

Ultimately, the technology you use to build your digital assistant is a means to an end. Your users will pass judgment based on their experience, not what drives it behind the scenes. Make sure you’re meeting their expectations.

Learn which design elements are critical to the success of digital assistants in our new report: Elements of a Successful Digital Assistant and How to Apply Them.

