Testing, Testing, 1-2-3: Conducting Remote Research with Voice User Interfaces

Posted by Ben Hoopes on Sep 15, 2020

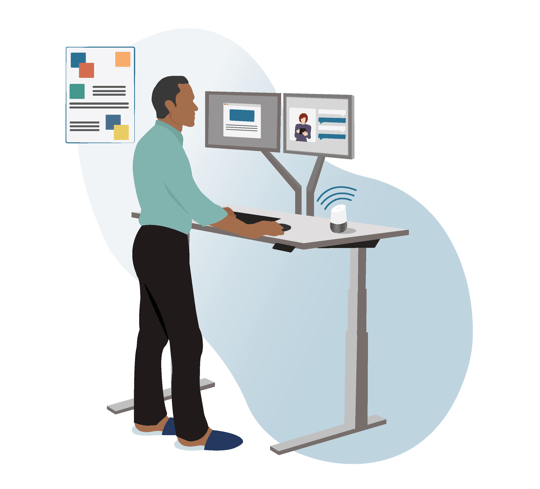

Voice user interfaces (VUIs) and smart speakers have become a standard part of everyday life for many people. According to eMarketer, more than a third of the US population will use a voice assistant monthly this year. On top of that, the pandemic has caused many consumers to rely on digital channels to interact with the brands they love. Customers are flocking to digital and omnichannel experiences while staying at home, and many intend to keep it that way long term. Conversational interfaces can help streamline those interactions and provide a digital way for your customers to connect with your brand.

Because of the technical constraints of this technology, user research with VUIs is often conducted in person, with a researcher, a participant, and a device all in the same room. However, with the risks of COVID-19, in-person testing isn’t as feasible, and researchers must get creative to study these platforms. As we’ve shifted our research to remote sessions, we’ve discovered some ways to mitigate the complications of testing voice interfaces at a distance. Here are some strategies for success:

Testing, Testing, 1-2-3

One exciting facet of remote VUI testing is that a participant can interact with a VUI in a few ways. They can use their own device in their home, a device in the researcher’s location over video conferencing tools, or even a low-fidelity prototype with Wizard of Oz testing.

As with any study, it’s crucial to walk through every aspect of a session ahead of time to keep an eye out for potential pitfalls. If your participants do not have their own voice assistant at home, they can interact with a VUI in the researcher’s location simply by communicating with it through their computer’s microphone and speaker over video. This removes any prototype sharing needs, ensures a consistent experience across participants, and can simplify recruiting requirements as well.

However, with more devices comes more risk. With multiple people interacting with multiple microphones and speakers over video conferencing software, the threat of the dreaded feedback loop echo (also known as the crescendoing banshee death shriek we all know) is omnipresent.

To keep this risk to a minimum, it’s important to do a dry run with a willing guinea pig colleague at low volumes. During that dry run, make sure:

- The participant can hear the device clearly over the video call (if it’s not in their home)

- The device can hear the participant

- The video conferencing software you choose can pick up all the audio and video interactions necessary

- The participant can see the device so they can react to any visual cues the device might offer. If interacting with a conversational AI app on a mobile device, the moderator can join the meeting from that device and share the mobile device’s screen. If the device is separate, aim to set up a camera that can display a clear view of the device to the participant.

In addition to working through sound and video challenges, there are often logistical challenges related to the basic setup of the devices and software. Walk through every step of the setup process in advance. Ask yourself:

- Does the device and/or app require someone to sign in? If so, whose credentials will be used? Should the participant sign in ahead of time to avoid sharing any private or personally identifiable information (PII)?

- Are any sign-ins to third-party apps necessary to test the full experience?

- Does the device require voice recognition for some or all tasks (meaning the task will only work for users who have created a voice profile)?

For lower-fidelity prototypes, Wizard of Oz testing is a great candidate for remote research. In this form of concept testing, the researcher takes on the role of the device, responding to participant inquiries directly or using a text-to-speech generator to communicate how the device would respond within the session. Because this does not require a fully functioning device or sophisticated prototype, you reduce technology-sharing concerns and minimize the time lost to setting up the speaker or to the device misunderstanding a participant’s request. Learn more about how to approach Wizard of Oz testing.
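For teams that want a little structure behind the researcher-as-device role, here is a minimal Python sketch of a cue sheet that suggests a scripted reply for whatever the participant just said. It is only an illustration under stated assumptions: the names `ScriptedReply`, `SCRIPT`, `FALLBACK`, and `suggest_reply`, along with every sample phrase, are hypothetical and do not come from any real voice assistant API or from the study setup described here. The matching is simple keyword counting, not speech recognition.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ScriptedReply:
    """One line of the hypothetical Wizard of Oz script."""
    keywords: Tuple[str, ...]  # words that suggest this reply fits
    reply: str                 # what the "device" should say


# Illustrative fallback for requests the script does not cover.
FALLBACK = "Sorry, I didn't catch that. Could you say it again?"

# Illustrative script; a real study would draft this from the test plan.
SCRIPT: List[ScriptedReply] = [
    ScriptedReply(("weather", "forecast"), "Today will be sunny with a high of 72."),
    ScriptedReply(("timer",), "Okay, your timer is set."),
    ScriptedReply(("play", "music"), "Playing your morning playlist."),
]


def suggest_reply(utterance: str, script: List[ScriptedReply] = SCRIPT) -> str:
    """Return the scripted reply whose keywords best match the utterance.

    The researcher types a rough summary of what the participant said and
    gets back a line to read aloud or paste into a text-to-speech tool.
    """
    # Lowercase and split into words, dropping punctuation.
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best, best_hits = None, 0
    for entry in script:
        hits = sum(1 for keyword in entry.keywords if keyword in words)
        if hits > best_hits:  # ties keep the earlier script entry
            best, best_hits = entry, hits
    return best.reply if best is not None else FALLBACK
```

During a session, the moderator (or a second researcher acting as the "wizard") could call `suggest_reply("what's the weather like today")` and voice the result. Keeping a fallback line in the script means the wizard always has something consistent to say when a participant goes off script, which keeps the experience comparable across participants.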

For participants, permission is paramount

With conversational AI, it is difficult to predict what an AI algorithm will hear, or think it has heard, during an interview. As a result, there is no telling what its response might be.

To ensure the participant knows what to expect, prior to the study, our Research Ops team communicates the following to the participant:

- Which of their personal devices they should be prepared to use in their location

- What devices they should expect to interact with remotely in the researcher’s location

- Whether they are required to set up any voice profiles or sign in to any services or apps

- Any chance of their private data or settings being surfaced during the session

One way to simplify some of this preparation is by having participants interact with a device that is physically at the researcher’s location. Using a test account, rather than the participant’s account, removes the risk of personal data being shared by the VUI.

Permission considerations can be nuanced and we never want to reveal personal data or PII unnecessarily. Thinking through these aspects may require a thorough walkthrough of the test plan earlier than usual, before any recruiting kicks off.

Keep ethics and inclusion top of mind

The risk of an algorithm misunderstanding (and ultimately excluding a user as a result) may be greater for minority communities, since they are less likely to have participated in the design of the technology. Many of us have experienced or heard stories about how VUIs often misunderstand users with accents or speech impediments, or those who use “non-standard” English. Learn more about why this happens.

As always with rapidly emerging technologies, and particularly with AI, it is important to keep an eye out for ways that the technology could negatively affect already marginalized groups. This challenge can be amplified with remote research, since it can be more difficult for the researcher to read the participant’s mood and non-verbal cues over video.

Frequent temperature checks are especially important for uncovering negative side effects of technology during remote sessions. Designate moments at each stage of the interview for the moderator to ask participants:

- How they are feeling

- What impact that feeling is having on them in the moment

- What impact they would expect this experience to have on them if they were interacting with the VUI without the researcher present

Since moderators cannot predict all of the effects a technology might have on a participant, it is always good practice to ask an open-ended question at the end of a session, giving the participant the floor to share any additional concerns. For example, “What else, if anything, do you feel is important to consider about this concept that we have not discussed today?” You might discover new insights that would never have surfaced otherwise.

Remote testing has some silver linings

Though remote VUI research brings a unique set of challenges, it also has some hidden benefits. In some ways, the researcher not being in the same physical location as the participant makes the testing more realistic. Without a doubt, it more closely mimics many participants’ natural experience with VUIs: in their typical environment, speaking to a device. It also provides access to a greater swath of participants who may not normally make it into your lab, allowing for a potentially more inclusive recruit.

Use this to your advantage when designing your study to mimic a real-life scenario, and let participants know they should feel comfortable communicating with the VUI as they normally would at home. You might ask them to pretend you’re not even there.

...

Voice user interfaces provide immense opportunities to streamline the way your customers interact with your brand, but there’s immense risk if you get it wrong. Turning to remote research and shifting your moderation techniques accordingly is critical to keeping up with customer needs and increasing the probability that you get it right.

A VUI’s job is to convert spoken words into code it can read, find an answer, and respond to the user. It’s the researcher’s job to pose a question, read between the lines, and speak for the user.

Want to hear more about this topic? Watch our webinar recording: Is This Thing On? How to Test Voice Assistants Remotely.

Learn more about how we approach research with conversational interfaces and voice assistants.

Ben Hoopes

Ben Hoopes, a member of our AnswerLab Alumni, led research as a Principal UX Researcher at AnswerLab, helping answer our clients’ strategic business questions and create experiences people love. He is passionate about the power of UX research to shine a light on unseen paths forward.
